April 15, 2021

Here is the article.

I would like to spend 10 minutes going over the article quickly.

- DRAM - Dynamic random access memory (DRAM) is a type of semiconductor memory that is typically used for the data or program code needed by a computer processor to function. DRAM is a common type of random access memory (RAM) that is used in personal computers (PCs), workstations and servers.

Redis Enterprise Cluster Architecture

A cluster, in Redis Enterprise terms, is a set of cloud instances, virtual machine/container nodes, or bare-metal servers that let you create any number of Redis databases in a memory/storage pool shared across the set. The cluster doesn’t need to scale up/out (or down/in) whenever a new database is created or deleted. A scaling operation is triggered only when one of the predefined limit thresholds has been reached, such as memory, CPU, network, or storage IOPS.
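The threshold rule above can be sketched in a few lines of Python. This is only an illustration of the idea, not how Redis Enterprise implements it: the function names, the resource keys, and the limit values are all hypothetical, and utilization is assumed to be expressed as a fraction between 0.0 and 1.0.

```python
from typing import Dict, List


def breached_thresholds(usage: Dict[str, float], limits: Dict[str, float]) -> List[str]:
    """Return the names of resources whose utilization has reached its limit.

    Both dictionaries map a resource name to a fraction (0.0 to 1.0).
    A resource missing from `usage` is treated as idle.
    """
    return sorted(
        name for name, limit in limits.items()
        if usage.get(name, 0.0) >= limit
    )


def should_scale(usage: Dict[str, float], limits: Dict[str, float]) -> bool:
    """Scale only when at least one limit is reached.

    Note that the number of databases is not an input at all:
    creating or deleting a database does not by itself trigger scaling.
    """
    return bool(breached_thresholds(usage, limits))


if __name__ == "__main__":
    # Hypothetical limits, chosen only for the example.
    limits = {"memory": 0.80, "cpu": 0.75, "network": 0.70, "storage_iops": 0.90}
    usage = {"memory": 0.85, "cpu": 0.40, "network": 0.10, "storage_iops": 0.20}
    print(breached_thresholds(usage, limits), should_scale(usage, limits))
```

The point of the sketch is that the decision depends only on resource utilization, which matches the statement that adding a database to the shared pool does not force the cluster to grow.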

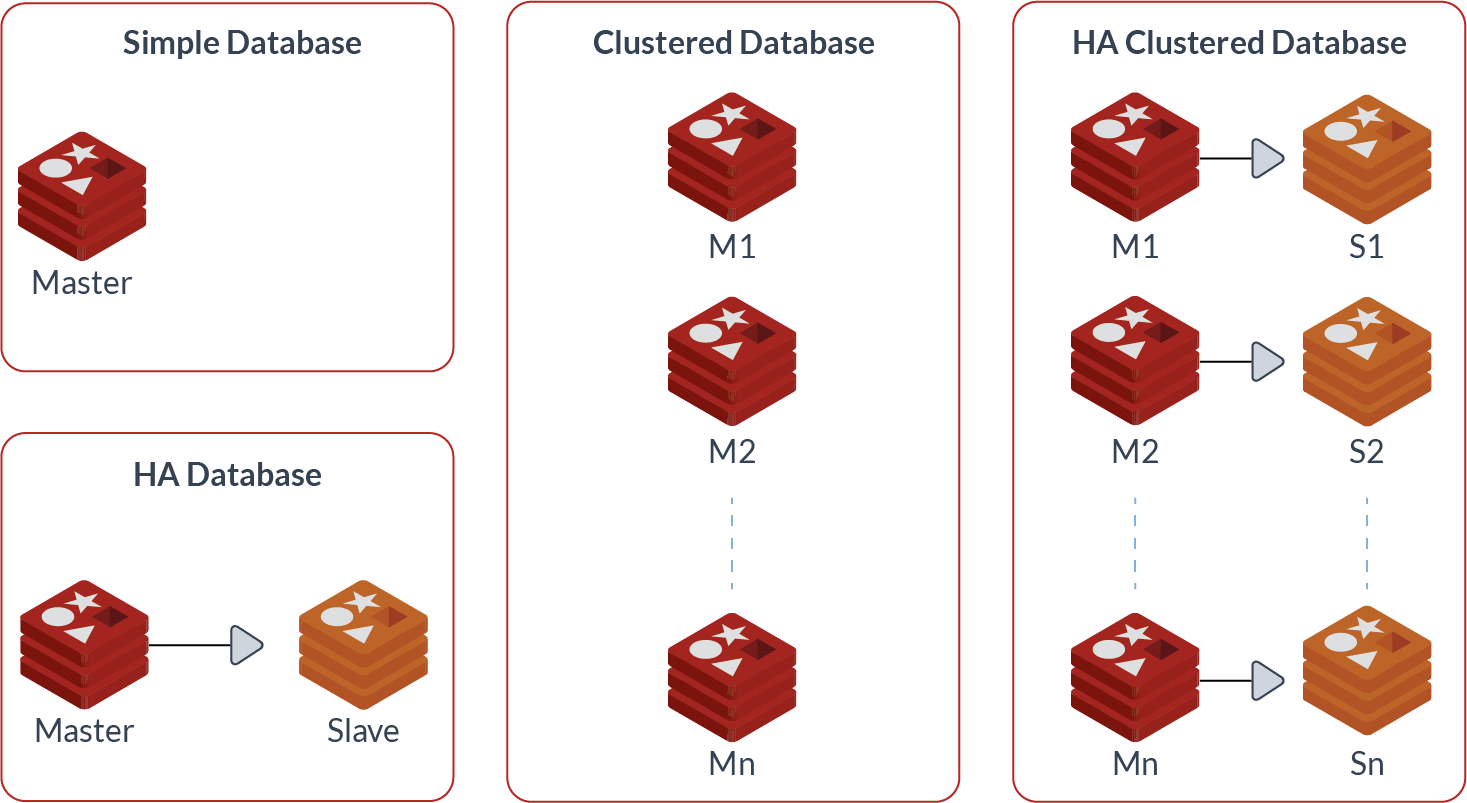

At any given time, a Redis Enterprise cluster node can host anywhere from zero to a few hundred Redis databases, each of one of the following types:

- A simple database, i.e. a single master shard

- A highly available (HA) database, i.e. a pair of master and slave shards

- A clustered database, which contains multiple master shards, each managing a subset of the dataset (or in Redis terms, a different range of “hash-slots”)

- An HA clustered database, i.e. multiple pairs of master/slave shards
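To make the "hash-slots" idea concrete, here is a minimal sketch of how a key is mapped to a slot. It assumes the rule used by open source Redis Cluster (CRC16 of the key modulo 16384, with optional `{...}` hash tags); the article does not spell out the rule, and a Redis Enterprise database may be configured with a different hashing policy. The function name `key_hash_slot` is my own.

```python
import binascii

HASH_SLOTS = 16384  # number of slots in open source Redis Cluster


def key_hash_slot(key: bytes) -> int:
    """Map a key to a hash slot using the open source Redis Cluster rule.

    If the key contains a non-empty hash tag such as b"{user1000}", only the
    text between the first "{" and the next "}" is hashed, so related keys
    can be forced into the same slot (and therefore the same shard).
    """
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        if end != -1 and end != start + 1:  # ignore an empty tag "{}"
            key = key[start + 1:end]
    # binascii.crc_hqx with an initial value of 0 is the CRC16 (XMODEM)
    # variant that the Redis Cluster specification describes.
    return binascii.crc_hqx(key, 0) % HASH_SLOTS


if __name__ == "__main__":
    print(key_hash_slot(b"foo"))
    # Both keys share the tag "user1000", so they land in the same slot.
    print(key_hash_slot(b"{user1000}.following") == key_hash_slot(b"{user1000}.followers"))
```

Each master shard of a clustered database then owns a range of these slot numbers, which is what "a subset of the dataset" means in practice.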

Each database can be built in several forms:

- A Redis on DRAM database

- A Redis on Flash database, in which Flash (SSD or persistent memory) is used as a DRAM extender

- A memcached database (in reality, a Redis on DRAM database, accessible through the memcached protocol)

Each database can be accessed in multiple ways:

- Database endpoint: Simply connect your application to your database endpoint, and Redis Enterprise will transparently handle all the scaling and failover operations.

- Sentinel API: Use the Sentinel protocol to connect to the right node in the cluster in order to access your database.

- OSS Cluster API: Use the cluster API to directly connect to each shard of your cluster without any additional hops.
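The "no additional hops" part of the OSS Cluster API comes from the client knowing which shard owns which hash slots. Below is a rough sketch of the lookup table a cluster-aware client might keep. It is not taken from any real client library: the `SlotMap` class, the three slot ranges, and the shard addresses are all made up for the example.

```python
import bisect
from typing import List, Tuple


class SlotMap:
    """Maps a hash slot to the address of the shard that owns it."""

    def __init__(self, ranges: List[Tuple[int, int, str]]) -> None:
        # Each entry is (first_slot, last_slot, "host:port"), both ends inclusive.
        self._ranges = sorted(ranges)
        self._starts = [first for first, _, _ in self._ranges]

    def node_for_slot(self, slot: int) -> str:
        # Find the last range whose first slot is <= the requested slot.
        i = bisect.bisect_right(self._starts, slot) - 1
        if i >= 0:
            first, last, address = self._ranges[i]
            if first <= slot <= last:
                return address
        raise KeyError(f"no shard owns slot {slot}")


if __name__ == "__main__":
    # Hypothetical three-shard layout covering slots 0 to 16383.
    slot_map = SlotMap([
        (0, 5460, "10.0.0.1:12000"),
        (5461, 10922, "10.0.0.2:12000"),
        (10923, 16383, "10.0.0.3:12000"),
    ])
    print(slot_map.node_for_slot(12182))
```

With the plain database endpoint, none of this lives in the application: the client talks to a single address and the routing happens on the cluster side.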

Multiple databases from different applications and users can run on the same Redis Enterprise cluster and node while being fully isolated.

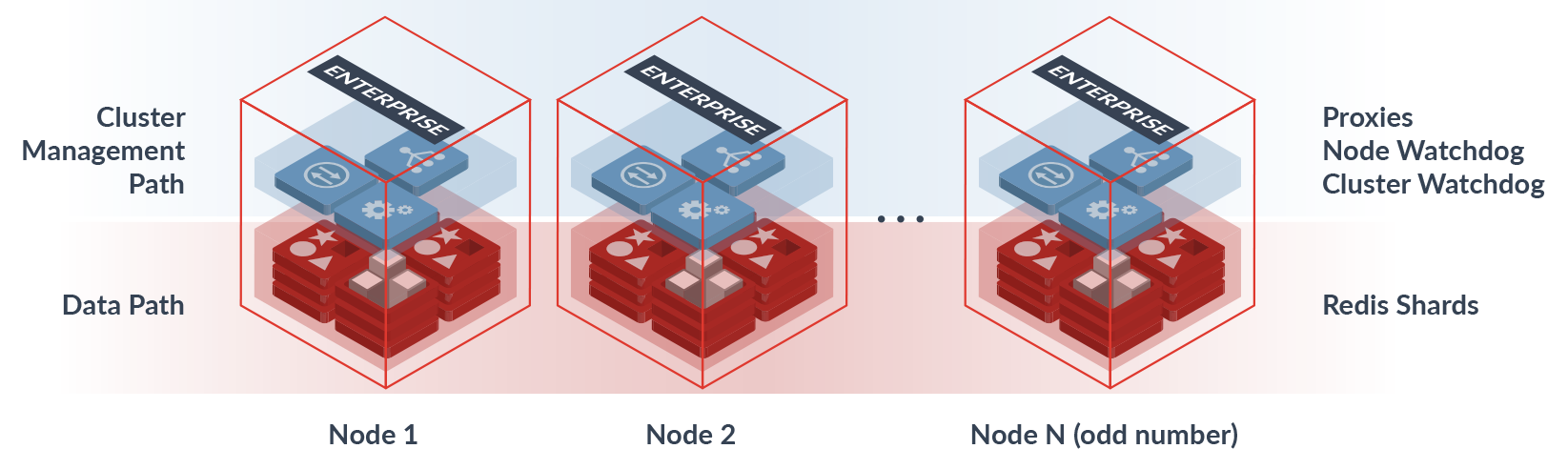

Shared-nothing, linearly scalable, multi-tenant, symmetric architecture

Redis Enterprise Cluster

Redis Enterprise cluster is built on a complete separation between the data-path components (i.e. proxies and shards) and the control/management path components (i.e. cluster-management processes), which provides a number of important benefits:

- Performance: Data-path entities need not deal with control and management duties. The architecture guarantees that any processing cycles are dedicated to serving users’ requests, which improves the overall performance. For example, each Redis shard in a Redis Enterprise cluster works as if it were a standalone Redis instance. The shard doesn’t need to monitor other Redis instances, has no need to deal with failure or partition events, and is unaware of which hash-slots are being managed.

- Availability: The application continues to access data from its Redis database, even as sharding, re-sharding, and re-balancing take place. No manual changes are needed to ensure data access.

- Security: Redis Enterprise prevents configuration commands from being executed via the regular Redis APIs. Configuration operations are allowed only through a secure UI, CLI, or API interface that follows role-based authorization controls. The proxy-based architecture ensures that only certified connections can be created with each shard, and only certified requests can be received by Redis shards.

- Manageability: Database provisioning, configuration changes, software updates, and more are done with a single command (via UI or API) in a distributed manner and without interrupting user traffic.

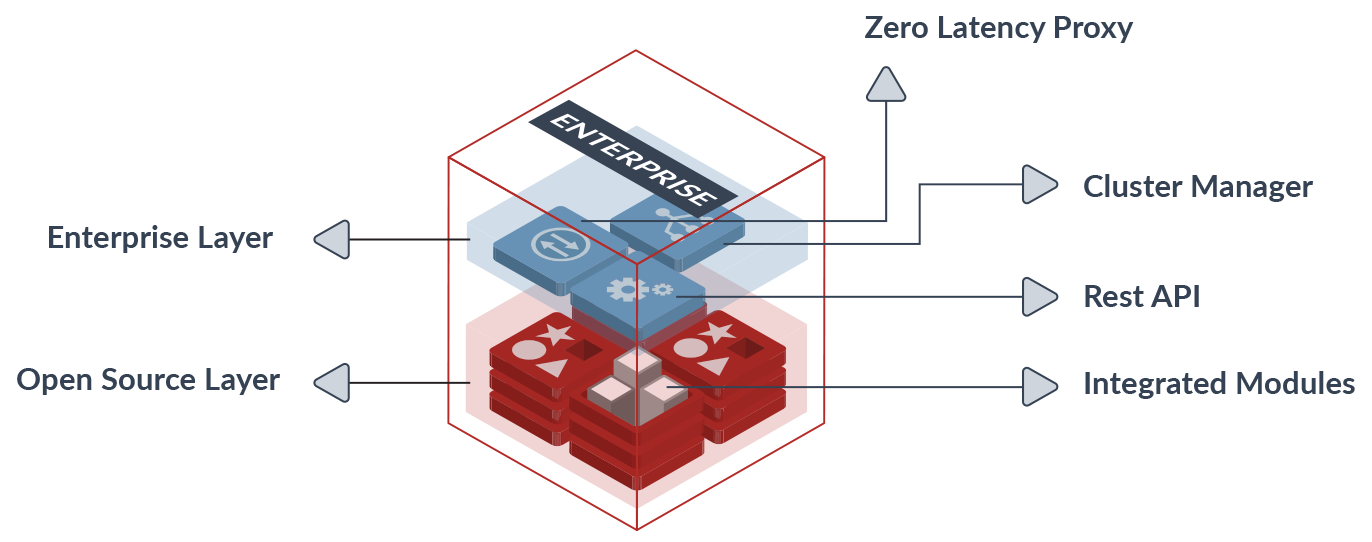

Redis Enterprise cluster components

Redis Enterprise cluster is built on a symmetric architecture, with all nodes containing the following components:

- Redis shard: An open source Redis instance with either a master or slave role that is part of the database.

- Zero-latency proxy: The proxy runs on each node of the cluster, is written in C, and is based on a cut-through, multi-threaded, lock-free, stateless architecture. The proxy handles the following primary functions:

    - hides cluster complexity from the application/user

    - maintains the database endpoint

    - forwards requests

    - supports the memcached protocol

    - manages data encryption through SSL

    - provides strong, client-based SSL authentication

    - enables Redis acceleration through pipelining and connection management

- Cluster manager: This component contains a set of distributed processes that together manage the entire cluster lifecycle. The cluster manager entity is completely separated from the data path components (proxies and Redis shards) and has the following responsibilities:

    - database provisioning and de-provisioning

    - resource management

    - watchdog processes

    - auto-scaling

    - re-sharding

    - re-balancing

- Secure REST API: All the management and control operations on the Redis Enterprise cluster are performed through a dedicated and secure API that is resistant to attacks and provides better control of cluster admin operations. One of the main advantages that this interface provides is the ability to provision and de-provision Redis resources at a very high rate, and with almost no dependency on the underlying infrastructure. This makes it very suitable for the new generation of microservices-based environments.
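As a rough idea of what provisioning a database through the REST API could look like, here is a sketch that only builds the HTTP request and does not send it. The article gives no endpoint details, so everything specific here is an assumption to check against the official REST API documentation: port `9443`, the `/v1/bdbs` path, the `name` and `memory_size` fields, and HTTP basic authentication. The function name and the host are hypothetical.

```python
import base64
import json
import urllib.request


def build_create_db_request(cluster_host: str, user: str, password: str,
                            name: str, memory_bytes: int) -> urllib.request.Request:
    """Build (but do not send) a request that asks the cluster to create a database."""
    # Assumed request body: a database name and a memory limit in bytes.
    body = json.dumps({"name": name, "memory_size": memory_bytes}).encode("utf-8")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"https://{cluster_host}:9443/v1/bdbs",  # assumed port and path
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


if __name__ == "__main__":
    req = build_create_db_request(
        "cluster.example.com", "admin@example.com", "secret",
        name="orders-cache", memory_bytes=1024 ** 3,
    )
    print(req.get_method(), req.full_url)
    # Sending it would be: urllib.request.urlopen(req)
```

Because provisioning is a single API call against the cluster manager rather than a change to the underlying machines, it fits the "very high rate" of provisioning and de-provisioning that the article describes.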
